Multi-Team Review

Play Description

Pattern Summary

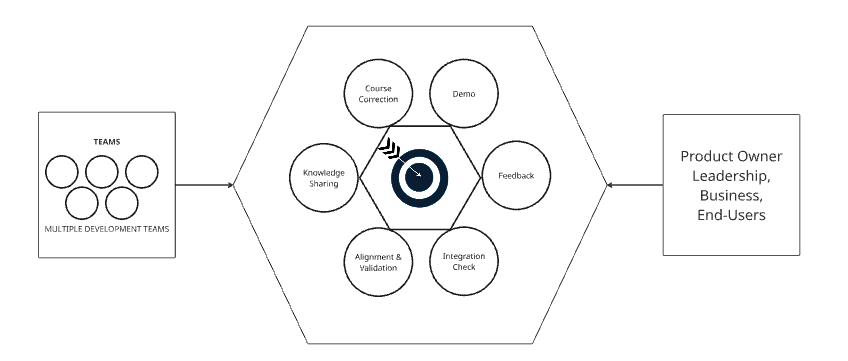

The Multi-Team Review is a combined review of a product, service, or solution by multiple teams to gather feedback on the product and identify ways to improve it. At the end of an iteration or increment, teams showcase their completed work by demonstrating working product features rather than delivering presentations, which increases transparency, alignment, and continuous improvement. The review is crucial for validating system-wide integration, gathering stakeholder feedback, and keeping development aligned with business goals and customer needs.

Related Patterns

Multi-Team Planning

Symptom Categories

Lack of Alignment Across Teams, Poor System Integration & Quality Issues, Limited Transparency & Feedback Loops, Ineffective Course Correction & Risk Management, Reduced Team Collaboration & Knowledge Sharing, Problem of Determining Progress and Status

Symptoms Addressed

- Lack of Alignment Across Teams: When teams work in silos, the work they perform does not align with shared business or product goals.

- Poor System Integration & Quality Issues: Components developed by individual teams work independently but fail when combined, causing system-wide issues and inconsistencies.

- Limited Transparency & Feedback Loops: Stakeholders and teams lack visibility into progress, which delays feedback and the validation of requirements.

- Ineffective Course Correction & Risk Management: Risks, technical debt, and misaligned features go unnoticed until they are costly to fix.

- Reduced Team Collaboration & Knowledge Sharing: Teams miss opportunities to learn from each other, causing duplicated work and conflicting implementations, and making it harder to maintain continuity when team members change.

- Problem of Determining Progress and Status: Without a clear, real-time view of overall progress, there is uncertainty about the system’s true status, making it difficult to assess integration, alignment, and delivery readiness.

Detailed Description

In this pattern, multiple teams working on different components or features of a system come together at the end of an iteration or increment to showcase their completed work. The primary objectives of the review are to:

- Demonstrate progress: Teams present their working software or system increment to stakeholders, product owners, and other teams.

- Gather feedback: Stakeholders provide real-time feedback to ensure the development aligns with business needs and customer expectations.

- Ensure integration quality: The review helps validate how well different components integrate and function as a cohesive system.

- Improve collaboration: Teams gain visibility into each other’s work, fostering knowledge sharing and identifying dependencies or gaps.

The Multi-Team Review is usually time-boxed and structured around working functionality rather than presentations or slides. Feedback from stakeholders and teams provides insight into user needs, technical challenges, and business priorities, and the results of the review inform future iterations, backlog refinement, and any course corrections needed to stay aligned with overall business goals.

This pattern plays a critical role in scaled agile environments by enhancing transparency, accelerating learning cycles, and ensuring that the developed system meets business and customer expectations through continuous feedback and adaptation.

In Frameworks

Several Agile frameworks define their own form of the Multi-Team Review:

Scaled Agile Framework (SAFe):

In SAFe, the Multi-Team Review is implemented as the System Demo, which occurs at the end of every iteration to validate system-wide integration; a PI System Demo at the end of each Planning Interval (PI) shows the integrated work of the whole PI. The teams of the Agile Release Train (ART) demonstrate their completed features together in an integrated, production-like environment, showing that multiple components work together as intended. Unlike individual team reviews, the System Demo is an ART-level, cross-team event focused on end-to-end functionality rather than isolated team outputs.

Scrum@Scale:

In Scrum@Scale, the event analogous to a Multi-Team Review is referred to as the Scaled Sprint Review, which is an optional event depending on the organization’s needs. The Scaled Sprint Review focuses on the integration of features from various Scrum teams, aiming to provide transparency, promote cross-team collaboration, and ensure alignment with business priorities. When used, it typically occurs at the end of each Sprint and serves as a mechanism for inspecting the progress of the entire product increment, rather than individual team outputs.

Nexus:

In Nexus, the Multi-Team Review is the Nexus Sprint Review, held at the end of each Sprint; it replaces the individual Scrum Team Sprint Reviews. All Scrum Teams within the Nexus review their contributions together as a single Integrated Increment, confirming that the full product increment functions as expected and aligns with the Product Goal. The focus is on the integrated work, showing how the different teams’ efforts fit together into the complete system.

Extreme Programming (XP):

XP does not define a dedicated multi-team event; its counterpart to the Multi-Team Review follows from the core values of feedback, communication, and simplicity. At the end of each iteration, teams show the fully integrated system to stakeholders. Rather than focusing on isolated features, the review emphasizes continuously integrated, working code, so that the value delivered is demonstrated in functional form.

Product Operating Model (POM):

In the Product Operating Model, the Multi-Team Review is not a fixed event but is integrated into cross-functional collaboration workflows. In the Squad and Tribe Model, reviews happen at the tribe level, where squads align on progress and integration. Feature-based teams conduct cross-team reviews to ensure seamless product integration, while the Triad Model (Product Manager, Designer, Engineering Lead) enables continuous strategic and technical alignment through regular check-ins. POM adapts review mechanisms to fit organizational needs, focusing on continuous feedback, decentralized decision-making, and alignment across autonomous teams.

How to Use:

Timing & Frequency

- The Multi-Team Review takes place at the end of an iteration or increment (e.g., Sprint, Planning Interval).

- It is a time-boxed event, ensuring focused discussions and efficient execution.

Participants

- Development Teams: All teams working on different components of the system.

- Stakeholders: Product owners, business representatives, end-users, and leadership.

- Facilitator (optional): A Scrum Master, Release Train Engineer, or Agile Coach may help structure the session.

Format & Structure

- Live Demonstration: Teams showcase their completed, working features instead of using slide decks or theoretical discussions.

- Integration Focus: The demo validates how well different components work together in a cohesive system.

- Stakeholder Feedback: Stakeholders provide insights, ensuring that development aligns with business priorities and customer needs.

Do Not Use This Pattern When

While the Multi-Team Review is highly effective in ensuring integration and alignment across multiple teams, there are scenarios where it may not be the best approach:

- Limited Scale or Single-Team Projects: When only one or very few teams are involved, or when work is already tightly integrated within a single team, a traditional team-level review is often sufficient.

- Isolated, Non-Integrated Work: If teams are working on independent components with minimal dependencies, the overhead of coordinating a multi-team demo may not provide additional value.

- Excessive Coordination Overhead: When the process of organizing a multi-team review becomes overly complex and resource-intensive compared to the benefits it delivers, alternative review methods may be more effective.

- Limited Stakeholder Engagement Needs: If the product or project doesn’t require extensive cross-functional feedback—perhaps due to a more focused or niche scope—a full-scale multi-team review might be more than what’s necessary.

- Frequent Deliveries with Continuous Deployment: When teams release features continuously through automated deployments and validate them with A/B testing, real-time production feedback can take the place of an end-of-sprint demo, making a structured multi-team review largely redundant.

- Delayed Feedback in Fast-Paced Environments: When teams operate in a fast-paced development cycle, waiting for a large-scale demo can slow down decision-making and hinder the ability to quickly adapt to changes.

These considerations help ensure that the chosen review method fits the number of teams involved, their integration needs, the development stage, and the overall organizational context.

Use When…

Advantages

- Cross-Team Alignment: It fosters a shared understanding and alignment across multiple teams, helping ensure that all components integrate smoothly.

- Enhanced Transparency: Stakeholders gain full visibility into the system’s progress, helping them see how individual pieces come together.

- Early Issue Detection: Integration issues and dependencies are identified early, which minimizes the risk of costly rework later.

- Effective Stakeholder Feedback: Real-time feedback from product owners, business stakeholders, and end-users helps refine and align future work.

- Improved Collaboration: By bringing teams together, it encourages knowledge sharing and better coordination, reducing duplicated efforts.

- Rapid Course Correction: Regular demos allow teams to adjust priorities and address issues promptly, keeping development aligned with business goals.

- Validation in Early-Stage Development: Multi-Team Reviews help surface key challenges, validate technical decisions, and align teams before scaling further.

Disadvantages

- Coordination Overhead: Organizing and synchronizing multiple teams can be time-consuming and require significant planning.

- Resource Intensity: The effort needed to prepare and run an integrated multi-team demo can be high, especially if teams are not already well coordinated.

- Potential for Diluted Focus: With many teams presenting in the same session, the review can become overwhelming or unfocused, making it difficult to examine specific issues in depth.

- Limited Value in Small-Scale Projects: For projects involving only one or a few teams, the benefits of a multi-team review may not justify the extra effort.

- Complexity in Execution: Ensuring that all teams’ contributions are effectively integrated and demonstrated can be challenging, particularly in early project phases or when dependencies are not well-managed.

Additional Notes

Bibliography

Scaled Agile Framework (2024). System Demo. Retrieved from https://framework.scaledagile.com/system-demo

Scrum@Scale (2024). Scrum@Scale Guide. Retrieved from https://www.scrumatscale.com/scrum-at-scale-guide/

Scrum.org (2015). Sprint Review with Multiple Teams Developing One Product. Retrieved from https://www.scrum.org/forum/scrum-forum/5354/sprint-review-multiple-teams-developing-one-product

Scrum.org (2024). Nexus Guide. Retrieved from https://www.scrum.org/resources/nexus-guide

Extreme Programming.org (n.d.). Extreme Programming Practices. Retrieved from https://www.extremeprogramming.org

Agile Alliance (n.d.). XP - Extreme Programming. Retrieved from https://www.agilealliance.org/glossary/xp

ThoughtWorks (2022). Building a Modern Digital Product Operating Model. Retrieved from https://www.thoughtworks.com/insights/articles/building-modern-digital-product-operating-model

Fowler, M. (2017). Product Mode. Retrieved from https://martinfowler.com/articles/product-mode.html

PandaDoc (n.d.). How to Run a Successful Multi-Team Sprint Review. Retrieved from https://bamboo.pandadoc.com/issue002/how-to-run-a-successful-multi-team-sprint-review

Labunskiy, E. (2017). Multi-Team Sprint Review – How Do We Do It? Retrieved from https://www.linkedin.com/pulse/multi-teams-sprint-review-how-do-we-evgeniy-labunskiy/

Beck, K., & Andres, C. (2004). Extreme Programming Explained: Embrace Change (2nd ed.). Addison-Wesley.

Agile Manifesto (2001). Manifesto for Agile Software Development. Retrieved from https://agilemanifesto.org

Continuous Delivery (n.d.). Continuous Delivery and Integration. Retrieved from https://continuousdelivery.com

Product School (n.d.). Product Operating Models: How Top Companies Work. Retrieved from https://productschool.com/blog/product-strategy/product-operating-model